Written By: Kano Umezaki

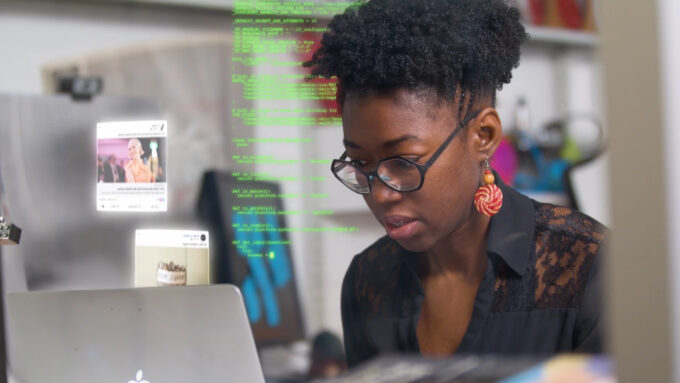

When MIT Media Lab researcher Joy Buolamwini finds that facial recognition software fails to identify darker-skinned people, she discovers that anti-Blackness is pervasive within the fabric of the digital world.

Shalini Kantayya’s documentary “Coded Bias” foregrounds the voice of Buolamwini in order to make visible what is often veiled: algorithmic racism. With industrial empires increasingly relying on technology, algorithms have been entrusted with a substantial amount of administrative and cultural power. From surveillance technology used to extend the carceral reach of prisons beyond their physical limits, to automated systems reinforcing anti-Blackness through digital redlining, technology is imbued with biases predicated on an ongoing history of white supremacy. By unveiling the oppressive reality of algorithms, “Coded Bias” prompts us to question the liberties we hold –– or seem to hold –– within a world underwritten and organized by destructive code. “My own lived experiences show me that you can’t separate the social from the technical,” says Buolamwini in the film.

As of now, over 117 million people in the U.S. –– about one in two adults –– have their faces stored in a facial recognition network. These faces are susceptible to unwarranted searches by the militarized police, meaning the U.S. operates as a mass-surveillance state. People’s private lives are surveilled as public property, placing us under the chokehold and scrutiny of corporate commercial interests.

The film also mentions that the future of A.I. is vested in the material grip of nine corporations: Alibaba, Amazon, Apple, Baidu, Facebook, Google, IBM, Microsoft and Tencent. In other words, the algorithmic structure of unwarranted mass-surveillance and digital redlining is written and imposed by a few people whose fundamental motive is accumulating capital. The asymmetrical power relations embedded in code lead to predatory marketing strategies that leech off the materially impoverished, most of whom lie at the intersections of being Black, Indigenous, disabled, undocumented, women, trans and fems. It is no accident, then, that algorithms mirror and magnify an anti-Black, patriarchal world.

At one point, Tranae Moran, a Brooklyn tenant, talks about her experience living with invasive surveillance technology at her apartment in Brownsville. According to Moran, landlords have replaced keyholes with facial recognition software to police and harass people whose behaviors are deemed suspicious. Under the guise of safety, punitive technologies often draw borders around, and within, Black and Brown neighborhoods.

Buolamwini, along with other computer scientists and researchers spearheading the movement for algorithmic justice, is compelled to rewrite algorithmic inequality. They continue to push for legal regulation as well as corporate accountability. Kantayya deftly documents Buolamwini’s research criticizing Amazon’s facial recognition technology, where her findings show that facial recognition software is least effective on darker-skinned women and most effective on lighter-skinned men. Upon reading Buolamwini’s findings, Amazon dismissed her research as supposedly misinformed. “As a woman of color in tech expect to be discredited, expect your research to be dismissed,” says Buolamwini in the film. “If you’re thinking about those funding research in A.I., they’re these large tech companies. So if you do work that challenges them or makes them look bad, you might not have opportunities in the future.”

Kantayya also conducts interviews with prominent figures of the movement for algorithmic justice, including Cathy O’Neil, author of “Weapons of Math Destruction,” Safiya Umoja Noble, author of “Algorithms of Oppression,” and Amy Webb, author of “The Big Nine.” The voices present on-screen are predominantly women, particularly Black and other racialized women, who act on building a technological present that doesn’t exist or evolve for profit. Director Kantayya shares in an interview with Seventh Row, “I’m certainly conscious, as a woman of colour, who I position as thought leaders and experts in my films. I tend to focus on voices that are often out of the dialogue, but this was different. This was different because I actually think the people who are leading this movement are women and people of colour.”

Perhaps one of the most compelling aspects of the production behind “Coded Bias” is its use of digital effects that simulate the surveillance state. For one, Kantayya begins the film with a tweet from Tay, a Microsoft A.I. chatbot based on teenage girls’ data. Within sixteen hours of her Twitter launch, Tay became extremely xenophobic, racist and sexist, showing that machine learning is prone to replicating inequalities that already exist. The same machine-learning technology encoded in Tay is also encoded in automated systems that determine prison sentences, credit limits, health care access and job acceptances, among other decisions that affect our lives in material ways. Tay’s presence in the film, then, poses the question: Do we want these types of machine learning software to regulate our lives?

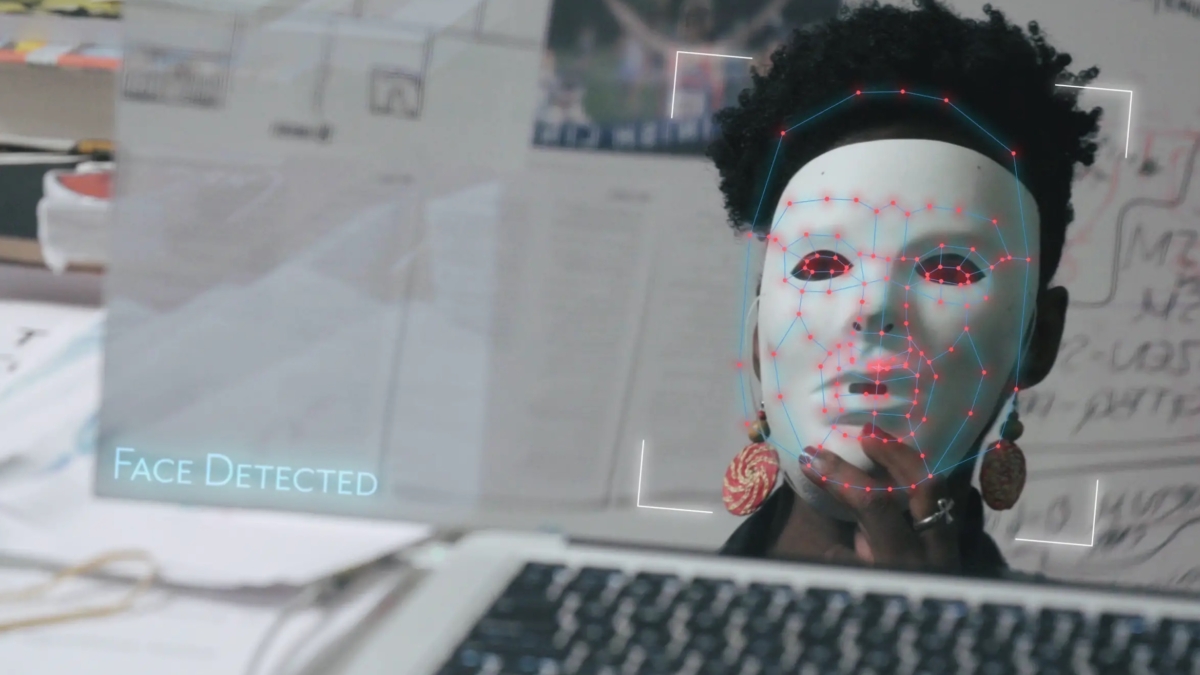

Kantayya also adds footage from the vantage point of a surveillance camera, making visible the systems we cannot see. She layers this surveillance footage, along with seemingly normal interview cuts, with face-detection simulations. The act of casting a digital layer on faces positions the audience as potential subjects of surveillance –– a terrifying position in which to find oneself.

The crux of algorithmic oppression lies in its inception. As the film describes, artificial intelligence began at the Dartmouth math department in 1956 among a group of eleven white men. These men held the power to mold what artificial intelligence looked like and could be, meaning their racist biases have immortalized themselves through code. “When you think of A.I., it’s forward looking; but A.I. is based on data and data is a reflection of our history. So the past dwells within our algorithms,” says Buolamwini in the film. “Coded Bias” shows us that not all technology is created in response to a need; rather, a great deal of technology is created to sustain racial capitalism. Technology, regardless of its form, is a means for capitalism to continue maximizing profit –– at the expense of social demise –– while naming its cause as “progress.”

While Kantayya’s film exposes the consequences of algorithmic oppression within the neoliberal apex that is America, she also situates her gaze transnationally –– namely on the U.K., Hong Kong and China. At one point, she intercuts footage of the CIA-backed Hong Kong protests in an attempt to draw connections among surveillance technologies across borders. She also documents Big Brother Watch, a U.K. organization that monitors facial recognition technologies used by British law enforcement. Through a public experiment, they found that facial recognition A.I. had only a 2% accuracy rate when used by the Metropolitan Police, and only an 8% accuracy rate with the South Wales Police.

Kantayya’s “Coded Bias” is a ground-breaking visual feat which speaks to the imminent, but hidden, threat of anti-Black machine learning software. By documenting the voices of powerful women such as Joy Buolamwini, Kantayya helps center communities whose lives have been abstracted and harassed by algorithmic oppression.

“Coded Bias” is screening at the 43rd Asian American International Film Festival. Ticket and screening information can be found here.